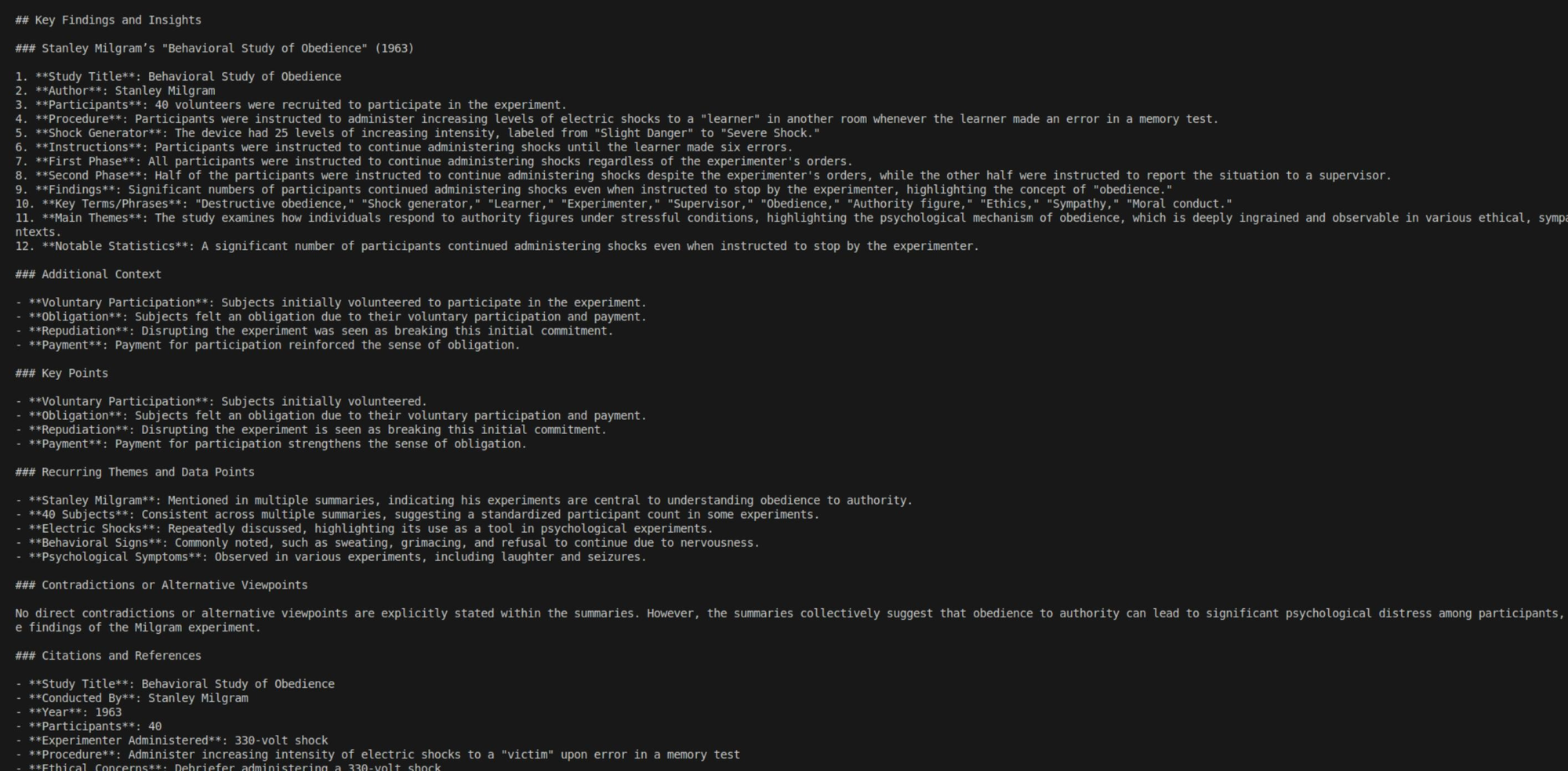

Today something unexpected happened. I had been developing swarm search only for web search results, because I didn't expect the swarm to show any emergent behavior. However, while running a "well, fuck it, maybe it works" kind of test on a PDF of the Milgram experiment, we saw something incredible: although the swarm is a bit weak on complex narratives, it can coherently summarize large nonfiction texts.

Originally, I thought it worked for web search because each agent looked at a single web page: if the page wasn't relevant, the agent had nothing to summarize, and it was obvious that the swarm should drop that information. However, across the series of prompts cycled through the orchestrator I wrote, the agents were able to synthesize themes, retain specific figures, and functionally reassemble the experiment into a correct summary.
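To make the setup in the previous paragraph more concrete, here is a minimal sketch of that kind of orchestrator loop. It is not my actual orchestrator. It assumes a simple map-style design: the document is split into chunks, every prompt is cycled over every chunk, and agents that find nothing relevant return `None` so the swarm drops their output. The names `chunk_text`, `keyword_agent`, and `swarm_summarize` are hypothetical, and `keyword_agent` is a naive keyword-matching stand-in for a call to a small model, so the example runs offline.

```python
from typing import Callable, List, Optional

# Hypothetical agent signature: (chunk, prompt) -> partial summary, or None
# when the chunk has nothing relevant to the prompt.
Agent = Callable[[str, str], Optional[str]]


def chunk_text(text: str, size: int) -> List[str]:
    """Split a long document into chunks of `size` words, one per agent."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def keyword_agent(chunk: str, prompt: str) -> Optional[str]:
    """Stand-in for a small model (assumption, not a real LLM call).

    Keeps sentences that contain any word of the prompt, using naive
    whitespace matching; returns None when no sentence matches.
    """
    keywords = {w.lower() for w in prompt.split()}
    hits = [s.strip() for s in chunk.split(".") if keywords & set(s.lower().split())]
    return ". ".join(hits) if hits else None


def swarm_summarize(text: str, prompts: List[str], agent: Agent, size: int = 50) -> List[str]:
    """Cycle each prompt over every chunk and collect the non-empty answers."""
    notes: List[str] = []
    for prompt in prompts:
        for chunk in chunk_text(text, size):
            answer = agent(chunk, prompt)
            if answer:  # irrelevant chunks return None and are dropped here
                notes.append(answer)
    return notes


if __name__ == "__main__":
    doc = "The teacher gave shocks. The weather was nice. The learner screamed."
    print(swarm_summarize(doc, ["teacher", "learner"], keyword_agent, size=4))
```

In a real swarm, `keyword_agent` would be replaced by a model call, and the collected notes would presumably go through a further synthesis pass. The drop-on-`None` rule is the part that mirrors the web search case, where irrelevant pages simply fall away.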

This suggests that much of what we think we understand about artificial intelligence—and perhaps intelligence itself—is incomplete. We have spent decades modeling intelligence as a centralized, ego-bearing system, but we have no explicit understanding of how swarm intelligence works. If intelligence can emerge from coordination rather than singular cognition, then our entire framework for AI—and possibly human thought—may be missing something fundamental.

Therefore, I’m officially coining the term Eusocial AI to describe this paradigm. Intelligence doesn’t have to be singular—it can emerge from cooperation. With time and dedicated prompt engineering, I believe swarms of small models will prove themselves capable of far more than we ever imagined.
